Design Distributed Message Queue - System Design Interview

TO DO: leaky bucket rate limit control bulkhead pattern and circuit breaker pattern

Problem Statment

Two web services, producer and consumer, need to communicate with each other.

Synchronous communcation is easy to implement but hard to deal with consumer service failures.

Potential problem includes:

- how to retry failed request

- how to control send speed

- hwo to deal with slow consumer

We need to build a queue to achieve asynchronous communcation.

For a queue, messages are received by only one consumer while topic messages will be received by each and all subscribers.

Functional Requirements

- sendMessage(messageBody)

- receiveMessage()

others that are not included here may be, createQueue() api, deleteMessageAPI()

Non-Functional Requirements

- Scalable(handles load increases, more queues and messages)

- Highly Available(survives hardware/network failrues)

- Hightly Performant(single digit latency for main operations)

- Durable(once submitted, data is not lost)

others may include throughput, SLA, cost requirements

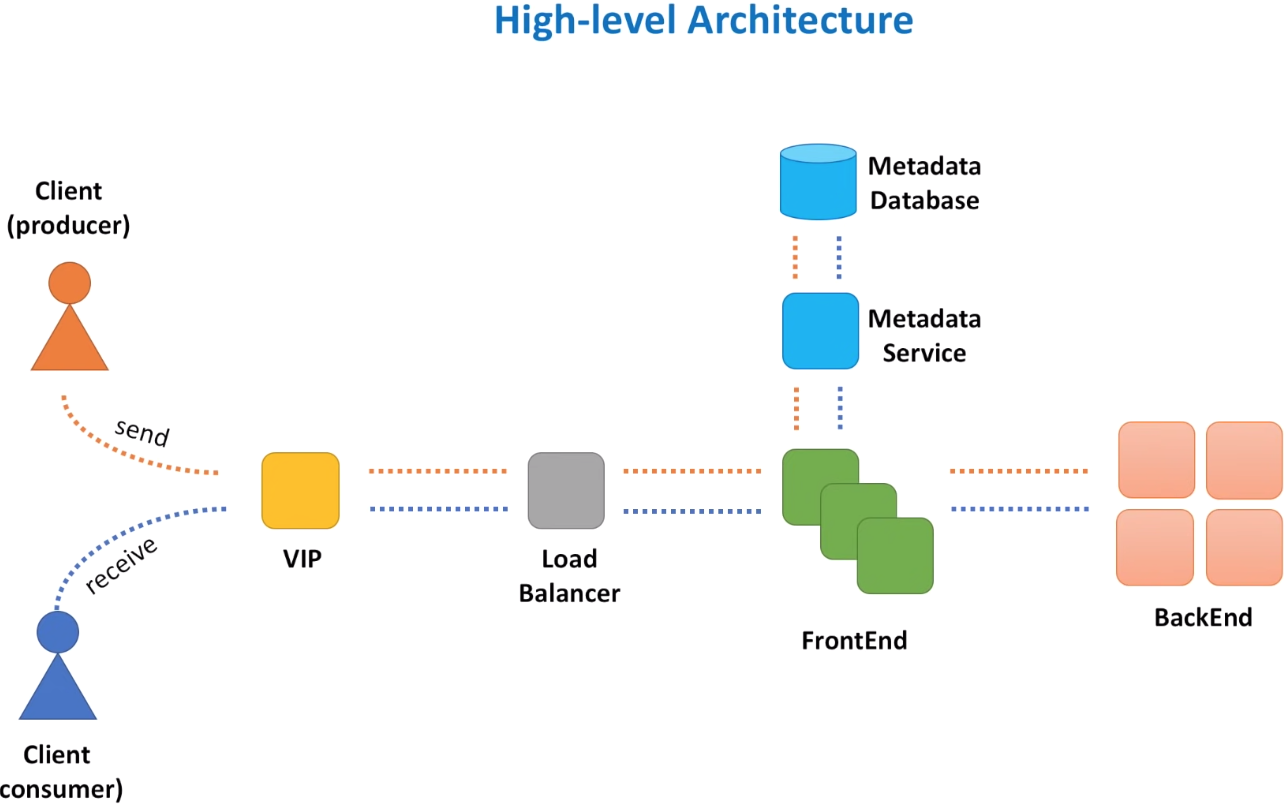

High Level Architecture

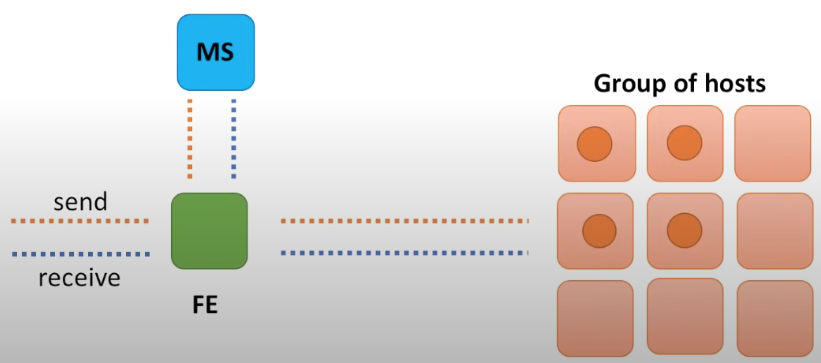

VIP: symbolic hostname resovled to load balancers Load balancer: route requests across a series of servers FrontEnd Service: component to address initial request processing, like validation, authentication Metadata Database: save metadata for queues, like owner, creation time Metadata Service: Facade to metadata database BackEnd service: messages persistence

VIP and Load balancer

The load balancer can be single point of failure. To address this issue, we can have primary/secondary nodes setup for load balancer. The secondary node keeps monitoring primary node and will take over if primary node is down.

The load balancer can be a bottleneck too. In this case, a concept of multiple VIPs or VIP partitioning, can used to address this utilized. In DNS, we assign multiple A record to the same DNS name for the service.

The “A” stands for “address” and this is the most fundamental type of DNS record: it indicates the IP address of a given domain. It’s possible to have multiple A records for a given domain to achieve a technique called

round robin load balancing, which can distribute request traffic to one of several IP addresses, each hosting identical content.

Round-robin DNS can be used when a website or service has their content hosted on several redundant web servers; when the DNS authoritative nameserver is queried for an IP address, the server hands out a different address each time, operating on a rotation. This is particularly useful when the redundant web servers are geographically separated, making traditional load-balancing difficult.

The round-robin method doesn’t always provide evenly-distributed load balancing because of both DNS caching and client-side caching. If a user makes a DNS query to a particularly high traffic recursive resolver for a particular website, that resolver will cache the website’s IP, potentially sending a heavy amount of traffic to that one IP.

Another drawback is that round-robin cannot be depended upon for site reliability; if one of the servers goes down, the DNS server will still keep that server’s IP in the round-robin rotation. So if there are 6 servers and one is taken offline, one in six users will be denied service. In addition, round-robin DNS does not account for server load, transaction time, geographical distance, and other factors that traditional load balancing can be configured for.

By spreading load balancers to multiple data centers, we address both scalability and performance issue.

FrontEnd Service

- A lightweight web service

- stateless service deployed across several data centers

Frontend Service Actions:

- Request validation

- check that parameters are existing and values are valid, e.g. message size doesn’t exceed size limit

- Authentication/authorization

- TLS(SSL) termination

- refers to the process of decrypting request passing on decrypted request to backend service

- it’s expensive to do TLS termintaion on load balancer

- the termination is usually handled by a separate TLS HTTP proxy running a process in the same host

- Server side encryption

- Messages are encrypted as long as FrontEnd service receives them and only decrypted before returning to consumer

- Caching

- Rate limiting(throttling)

- Throttling is the process of limiting the number of request you can submit to a given operation within a given period of time

- It protects the service from being overwhelmed

- Leaky bucket algorithm is the most famous

- Request dispatching

- responsible for all activities associated with sending request to backend service (client management, response handling, resource isolation)

- bulkhead pattern helps to isolate elements of an application into pools so that if one fails, the others will continue to function

- circuit breaker pattern prevents an application from repeatedly trying to execute an operation that’s likely to fail

- Request deduplication

- cache is usually used to save prevously seen request ids

- Usage data collection

- gathering usage information for auditing purpose

Metadata Service

- Saving queues to their backend clusters mapping, e.g. for a given queueId, find out the cluster that the queue is in

- Many reads, little writes only when new queues are created.

- It should have cache

Backend Service

Where and how do we store messages

- database is not a good option because the throughput of distributed message queue is expected high. If using database, we need to build a distributed database that can handle high throughput, it’s not easy. There are scalable distributed database existing. It’s OK to say using them if you’re interviewing a junior position but not the case for senior developer.

- We’ll use memory and local disk (file system) to store messages.

- How do we replicate data?

- replicate within a group of hosts

- database is not a good option because the throughput of distributed message queue is expected high. If using database, we need to build a distributed database that can handle high throughput, it’s not easy. There are scalable distributed database existing. It’s OK to say using them if you’re interviewing a junior position but not the case for senior developer.

How backend service is working in details?

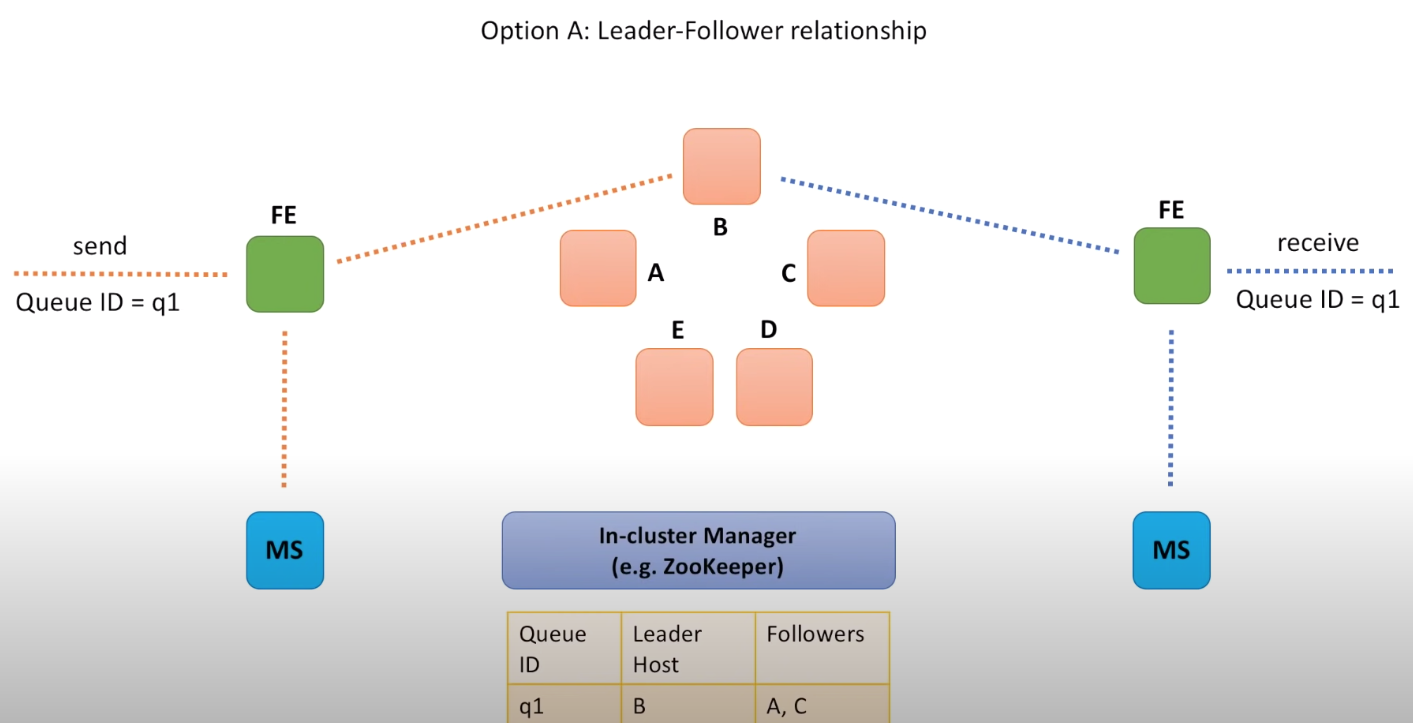

Option1 clusters are using leader-follower pattern, the drawback is that it’s hard to implemente highly scalable and available in cluster manager

- the message is first sent to the leader node and it’s leader node that is reponsible of replicating the message to follower nodes

- the message is first sent to the leader node and it’s leader node that is reponsible of replicating the message to follower nodes

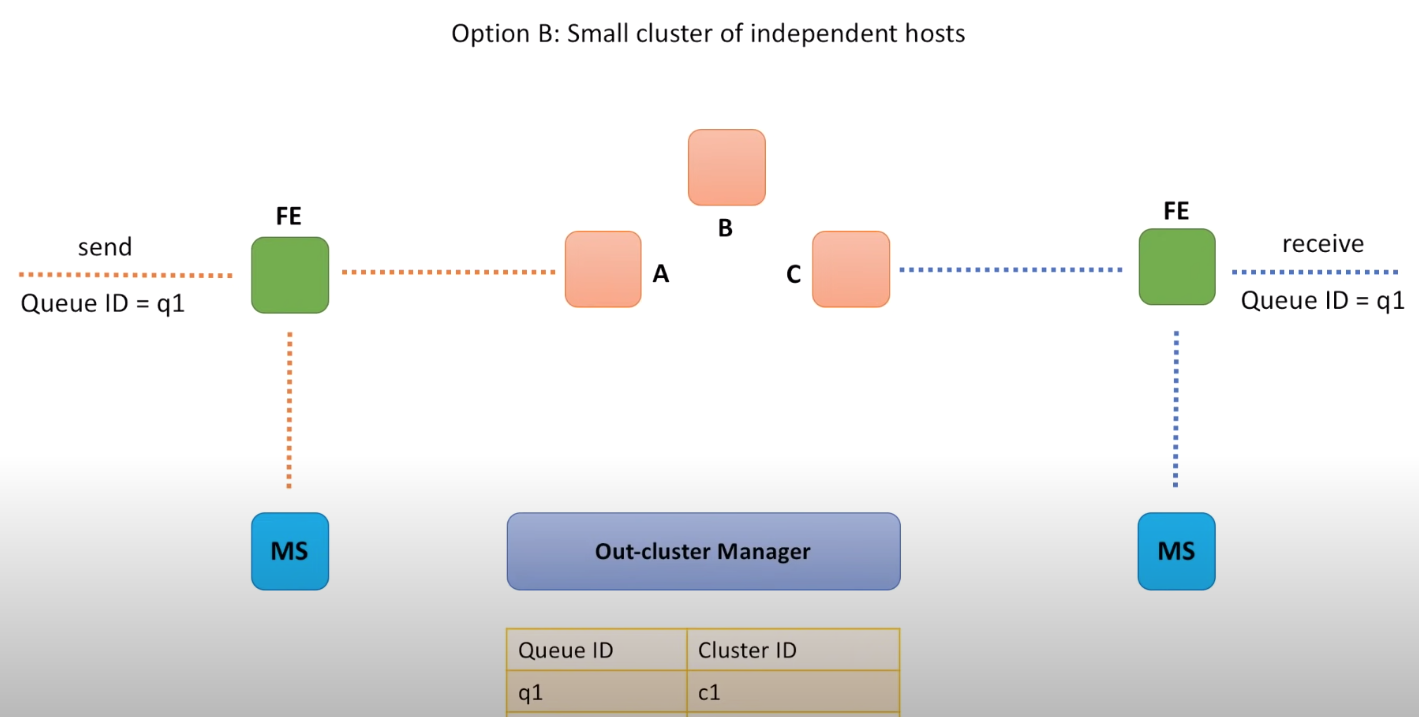

option2 is have small cluster with independent nodes, namely, all nodes are of same level. No leader or follower. Messages are first sent to a random node and the node is responsible of replicating messages to the rest of nodes. For messages clean up, we get the message from a random node and the node is responsbile of cleaning up the message from the rest of nodes.

- with this setup, we no longer need to have component for leader election. But we still need a component to maintain the queue to cluster mapping.

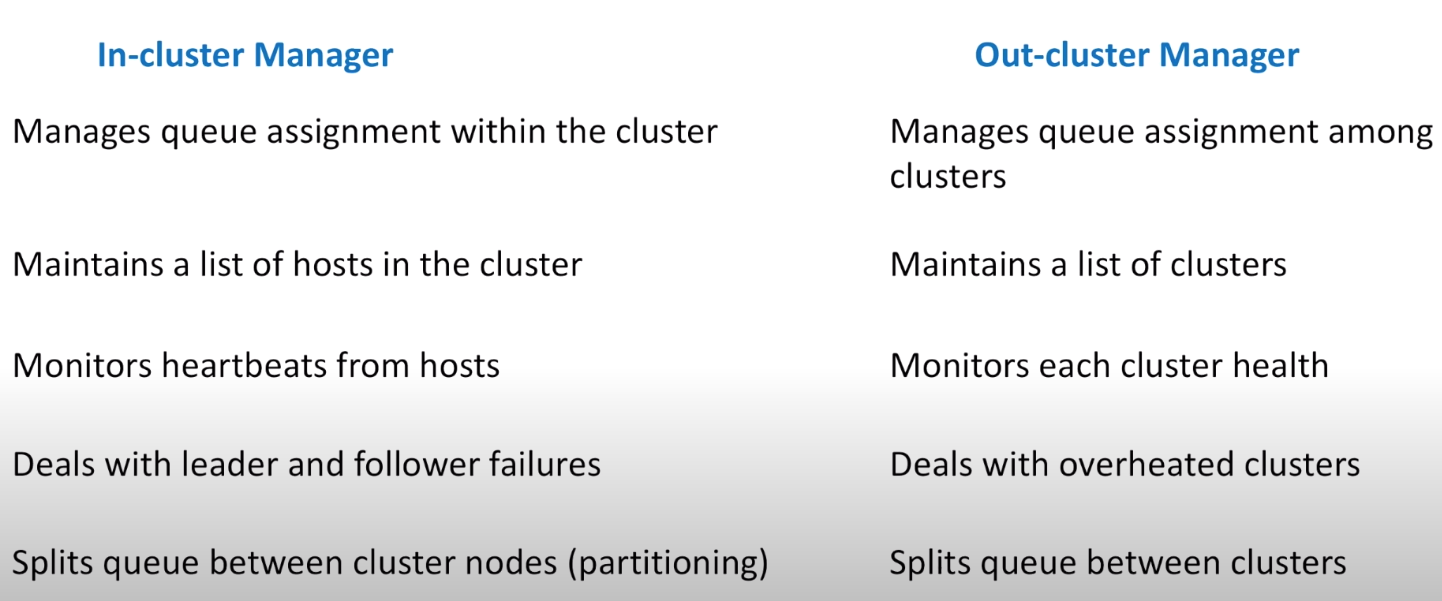

In cluster manager vs out-of cluster manager

What else are important?

- Queue creation and deletion

- Having API for queeu creation but not expose API for queue deltetion. Providing command line utility for admin users to delete queue.

- Message deletion

- Option 1, not deleting messages after they’re consumed and consumer is responsible of tracking which messages are consumed. It’s not easy though because we need to track the offset of current mesage in the queue and having scheduled job to delete them later. Apache Kafka is using this strategy

- option2, mark message as invisible after they’re consumed. Consumer needs to call API to delete messages after consumed the messages. If messages are not deleted by consumer, it’ll become visible later and have consumers to process them again.

- Message replication

- sync vs async relication, the trade off is latency vs durability

- Message delivery semantics

- delivering at least once, at most once and exactly once (which is hard to achieve in practical)

- push vs pull mode, pull is easy to implement than pushing but consumers will need to do more work

- FIFO

- Security

- Monitoring

Overall architecture

Appendix

Bulkhead pattern

The Bulkhead pattern is a type of application design that is tolerant of failure. In a bulkhead architecture, elements of an application are isolated into pools so that if one fails, the others will continue to function. It’s named after the sectioned partitions (bulkheads) of a ship’s hull. If the hull of a ship is compromised, only the damaged section fills with water, which prevents the ship from sinking.

Explaination

Partition service instances into different groups, based on consumer load and availability requirements. This design helps to isolate failures, and allows you to sustain service functionality for some consumers, even during a failure.

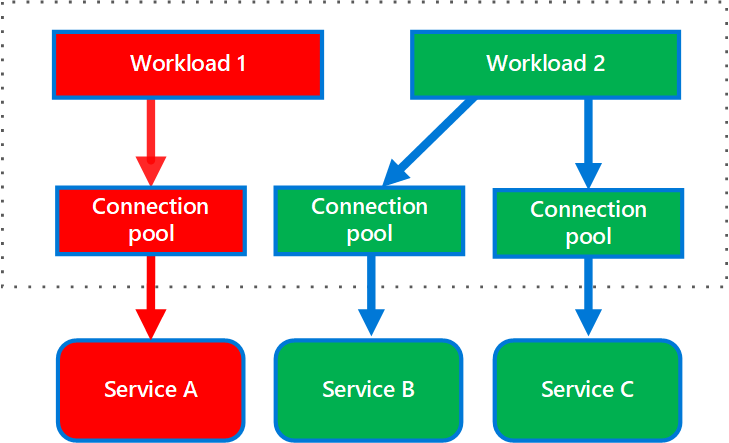

A consumer can also partition resources, to ensure that resources used to call one service don’t affect the resources used to call another service. For example, a consumer that calls multiple services may be assigned a connection pool for each service. If a service begins to fail, it only affects the connection pool assigned for that service, allowing the consumer to continue using the other services.

My summary

For clients, calling problematic services might take up and never release connection from connection pool which has impact on calling other services because connection pool resource is limited.

To solve it, create service-specific connection pool for each of downstream services so that the blast radius can’t limited.

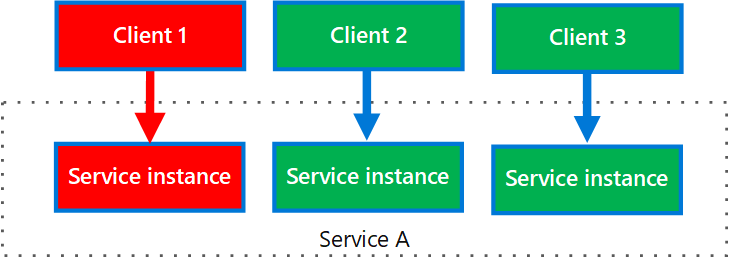

For service, responding one client might use a lot of resource and has impact on serving requests from other clients. A large number of requests originating from one client may exhaust available resources in the service. Other consumers are no longer able to consume the service, causing a cascading failure effect.

To solve it, creating client-specific instances so that one (or several) instances are just serving a certain client.

Benefits

The benefits of this pattern include:

- Isolates consumers and services from cascading failures. An issue affecting a consumer or service can be isolated within its own bulkhead, preventing the entire solution from failing.

- Allows you to preserve some functionality in the event of a service failure. Other services and features of the application will continue to work.

- Allows you to deploy services that offer a different quality of service for consuming applications. A high-priority consumer pool can be configured to use high-priority services.

The following diagram shows bulkheads structured around connection pools that call individual services. If Service A fails or causes some other issue, the connection pool is isolated, so only workloads using the thread pool assigned to Service A are affected. Workloads that use Service B and C are not affected and can continue working without interruption.

The next diagram shows multiple clients calling a single service. Each client is assigned a separate service instance. Client 1 has made too many requests and overwhelmed its instance. Because each service instance is isolated from the others, the other clients can continue making calls.